Ray Tracing in One Weekend in C++

GitHub Repository

Welcome to my adventure following the Ray Tracing in One Weekend series in C++.

Part 9

(Final Render of book 1)

Positionable Camera

Camera Viewing Geometry

First, we’ll keep the rays coming from the origin and heading to the z = -1 plane.

Camera.h

#pragma once

#include "Color.h"

#include "Hittable.h"

class Camera

{

public:

Camera() = default;

Camera(double imageWidth, double ratio, int samplePerPixel = 10, int bounces = 10, double vFoV = 90):

aspectRatio(ratio), width(imageWidth), sampleCount(samplePerPixel), maxBounces(bounces), verticalFoV(vFoV){}

void Render(const Hittable& rWorld);

private:

int height;

double aspectRatio, width;

int sampleCount;

int maxBounces;

double verticalFoV;

Position center, originPixelLocation;

Vector3 pixelDeltaX, pixelDeltaY;

void Initialize();

Color RayColor(const Ray& rRay, int bouncesLeft, const Hittable& rWorld) const;

Ray GetRay(int x, int y) const;

Vector3 PixelSampleSquared() const;

};

Camera.cpp

void Camera::Initialize()

{

height = static_cast<int>(width / aspectRatio);

if(height < 1) height = 1;

center = Position(0, 0, 0);

double focalLength = 1;

double theta = DegToRad(verticalFoV);

double h = tan(theta / 2);

double viewportHeight = 2 * h * focalLength;

double viewportWidth = viewportHeight * (static_cast<double>(width)/height);

Vector3 viewportX = Vector3(viewportWidth, 0, 0);

Vector3 viewportY = Vector3(0, -viewportHeight, 0); // invert Y

// Delta vector between pixels

pixelDeltaX = viewportX / width;

pixelDeltaY = viewportY / height;

//Position of the top left pixel

Vector3 viewportOrigin = center - Vector3(0, 0, focalLength) - viewportX / 2 - viewportY / 2;

originPixelLocation = viewportOrigin + 0.5 * (pixelDeltaX + pixelDeltaY);

}
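To make the field-of-view math above a bit more concrete, here is a minimal standalone sketch of the viewport height computation. `DegreesToRadians` and `ViewportHeight` are hypothetical helper names used only for this illustration; they are not part of the Camera class.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper for this sketch: converts degrees to radians.
inline double DegreesToRadians(double degrees)
{
    const double kPi = 3.14159265358979323846;
    return degrees * kPi / 180.0;
}

// Height of the viewport for a vertical field of view (in degrees) and a
// given distance between the camera center and the viewport plane.
inline double ViewportHeight(double verticalFoVDegrees, double focalLength)
{
    assert(verticalFoVDegrees > 0.0 && verticalFoVDegrees < 180.0);
    // h is half the viewport height at distance 1 from the camera.
    double h = std::tan(DegreesToRadians(verticalFoVDegrees) / 2.0);
    return 2.0 * h * focalLength;
}
```

With a 90 degree field of view and a focal length of 1, `ViewportHeight(90.0, 1.0)` comes out very close to 2, which is the viewport height we had before adding the field of view. A smaller angle gives a smaller viewport, so the scene looks zoomed in.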

Test it out & see how it goes

Raytracing.cpp

int main(int argc, char* argv[])

{

// World

HittableCollection world;

double radius = cos(pi / 4);

shared_ptr<Material> leftMat = make_shared<LambertianMaterial>(Color(0, 0, 1));

shared_ptr<Material> rightMat = make_shared<LambertianMaterial>(Color(1, 0, 0));

world.Add(make_shared<Sphere>(Position(-radius,0,-1), radius, leftMat));

world.Add(make_shared<Sphere>(Position(radius, 0,-1), radius, rightMat));

Camera camera(400, 16.0/9.0, 100, 50, 90);

camera.Render(world);

return 0;

}

We will start by setting up the point in space where the camera is located and the rotation of the camera to test our scene from a different point of view.

We will also add a parameter for the tilt of our camera, so we need to know which way is up.
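As a rough sketch of how a position, a target and an up direction can be turned into three perpendicular camera axes, here is a standalone version of the idea. `Vec3`, `CrossProduct`, `Normalized`, `CameraBasis` and `MakeCameraBasis` are stand-in names assumed for this example only; the series uses its own Vector3 type.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for the Vector3 type of the series (assumption).
struct Vec3 { double x, y, z; };

inline Vec3 operator-(const Vec3& a, const Vec3& b)
{
    return {a.x - b.x, a.y - b.y, a.z - b.z};
}

inline Vec3 CrossProduct(const Vec3& a, const Vec3& b)
{
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

inline Vec3 Normalized(const Vec3& v)
{
    double length = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(length > 0.0); // a zero vector has no direction
    return {v.x / length, v.y / length, v.z / length};
}

struct CameraBasis { Vec3 right, up, forward; };

// Builds three unit vectors that are perpendicular to each other.
// Note: "forward" here points from the target back to the camera.
inline CameraBasis MakeCameraBasis(const Vec3& position, const Vec3& target, const Vec3& viewUp)
{
    Vec3 forward = Normalized(position - target);
    Vec3 right = Normalized(CrossProduct(viewUp, forward));
    Vec3 up = CrossProduct(forward, right);
    return {right, up, forward};
}
```

For a camera at the origin looking at (0, 0, -1) with an up direction of (0, 1, 0), this gives right = (1, 0, 0), up = (0, 1, 0) and forward = (0, 0, 1). The up direction passed in does not need to be exactly perpendicular to the view direction, it only must not be parallel to it.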

Let's add the camera position and orientation

Camera.h

class Camera

{

public:

Camera() = default;

Camera(double imageWidth, double ratio, int samplePerPixel = 10, int bounces = 10, double vFoV = 90):

aspectRatio(ratio), width(imageWidth), sampleCount(samplePerPixel), maxBounces(bounces), verticalFoV(vFoV){}

void SetTransform(Position origin = Position(0, 0, 0), Position lookAt = Vector3(0, 0, -1), Vector3 upDirection = Vector3(0, 1, 0));

void Render(const Hittable& rWorld);

private:

int height;

double aspectRatio, width;

int sampleCount;

int maxBounces;

double verticalFoV;

Position position, target;

Vector3 viewUp;

Vector3 right, up, forward;

Position center, originPixelLocation;

Vector3 pixelDeltaX, pixelDeltaY;

void Initialize();

Color RayColor(const Ray& rRay, int bouncesLeft, const Hittable& rWorld) const;

Ray GetRay(int x, int y) const;

Vector3 PixelSampleSquared() const;

};

Camera.cpp

void Camera::Initialize()

{

height = static_cast<int>(width / aspectRatio);

if(height < 1) height = 1;

center = position;

double focalLength = (position - target).Length();

double theta = DegToRad(verticalFoV);

double h = tan(theta / 2);

double viewportHeight = 2 * h * focalLength;

double viewportWidth = viewportHeight * (static_cast<double>(width)/height);

forward = Unit(position - target);

right = Unit(Cross(viewUp, forward));

up = Cross(forward, right);

Vector3 viewportX = viewportWidth * right;

Vector3 viewportY = viewportHeight * -up; // invert Y

// Delta vector between pixels

pixelDeltaX = viewportX / width;

pixelDeltaY = viewportY / height;

// Position of the top left pixel

Vector3 viewportOrigin = center - (focalLength * forward) - viewportX / 2 - viewportY / 2;

originPixelLocation = viewportOrigin + 0.5 * (pixelDeltaX + pixelDeltaY);

}

void Camera::SetTransform(Position origin, Position lookAt, Vector3 upDirection)

{

position = origin;

target = lookAt;

viewUp = upDirection;

}

Now to test it out

Raytracing.cpp

int main(int argc, char* argv[])

{

// World

HittableCollection world;

shared_ptr<Material> groundMat = make_shared<LambertianMaterial>(Color(0.8, 0.8, 0.0));

shared_ptr<Material> centerMat = make_shared<LambertianMaterial>(Color(0.1, 0.2, 0.5));

shared_ptr<Material> leftMat = make_shared<DielectricMaterial>(1.5);

shared_ptr<Material> rightMat = make_shared<MetalMaterial>(Color(0.8, 0.6, 0.2), 0.0);

world.Add(make_shared<Sphere>(Position(0,-100.5,-1), 100, groundMat));

world.Add(make_shared<Sphere>(Position(0,0,-1), 0.5, centerMat));

world.Add(make_shared<Sphere>(Position(-1,0,-1), 0.5, leftMat));

world.Add(make_shared<Sphere>(Position(-1,0,-1), -0.4, leftMat));

world.Add(make_shared<Sphere>(Position(1, 0,-1), 0.5, rightMat));

Camera camera(400, 16.0/9.0, 100, 50, 90); // Here

camera.SetTransform(Position(-2, 2, 1), Position(0, 0, -1), Vector3(0, 1, 0)); // and Here

camera.Render(world);

return 0;

}

Defocus Blur

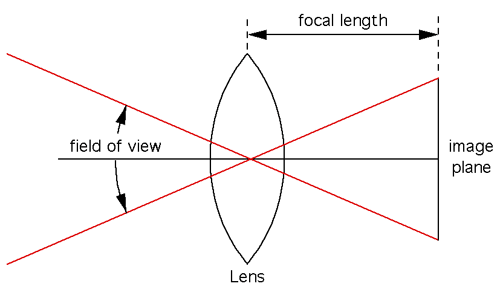

Thin lens in Cameras :

img. Source

If you wanna know more about lenses go here

Thin Lens Approximation

We could simulate how a real camera works by using its sensor, lens and aperture parameters and then flip the image (as the lens renders the picture upside down).

Instead, we use an approximation that is easier to use and close enough to the desired result.
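One way to picture the approximation: rays start from random points on a small disk around the camera center and all aim at the same sample point on the focus plane. The sketch below is only an illustration of that idea, with stand-in names (`Vec3`, `SimpleRay`, `MakeLensRay`) that are assumptions for this example and not the classes of the series.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for the Vector3 type of the series (assumption).
struct Vec3 { double x, y, z; };

inline Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(const Vec3& v, double t) { return {v.x * t, v.y * t, v.z * t}; }

// Stand-in ray: a point moving from origin along direction.
struct SimpleRay
{
    Vec3 origin, direction;
    Vec3 At(double t) const { return origin + direction * t; }
};

// Ray from a point on the lens disk through a sample on the focus plane.
inline SimpleRay MakeLensRay(const Vec3& lensPoint, const Vec3& pixelSample)
{
    return {lensPoint, pixelSample - lensPoint};
}
```

Two rays built from different lens points but the same sample meet again at `At(1.0)`, which is the sample itself. Anything lying on the focus plane therefore stays sharp, while objects in front of or behind it are seen from slightly different angles by each ray and end up blurred.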

Let's get a random point on a disk

Vector.h

inline Position RandomInUnitSphere()

{

while(true)

{

Position position = Vector3::Random(-1, 1);

if(position.SquaredLength() < 1) return position;

}

}

inline Vector3 RandomInUnitDisk()

{

while(true)

{

Vector3 position = Vector3(RandomDouble(-1, 1), RandomDouble(-1, 1), 0);

if(position.SquaredLength() < 1)

return position;

}

}
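The loop above is a rejection sampling: pick a point in the square around the disk and retry until it falls inside. Here is a standalone sketch of the same idea using only the standard `<random>` header, in case you want to try it outside the project. `DiskPoint` and `SampleUnitDisk` are hypothetical names for this example, and the series uses its own `RandomDouble` helper instead of `std::mt19937`.

```cpp
#include <cassert>
#include <random>

// Stand-in 2D point for this sketch (assumption).
struct DiskPoint { double x, y; };

// Draws points in the square [-1, 1) x [-1, 1) and returns the first one
// that lies strictly inside the unit disk.
inline DiskPoint SampleUnitDisk(std::mt19937& rng)
{
    std::uniform_real_distribution<double> dist(-1.0, 1.0);
    while (true)
    {
        double x = dist(rng);
        double y = dist(rng);
        if (x * x + y * y < 1.0)
            return {x, y};
    }
}
```

The disk covers pi / 4 of the square, so roughly 78.5% of the candidates are accepted and the loop rarely runs more than a couple of times.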

Complete the camera with focus

Camera.h

#pragma once

#include "Color.h"

#include "Hittable.h"

class Camera

{

public:

Camera() = default;

Camera(double imageWidth, double ratio, int samplePerPixel = 10, int bounces = 10, double vFoV = 90):

aspectRatio(ratio), width(imageWidth), sampleCount(samplePerPixel), maxBounces(bounces), verticalFoV(vFoV), defocusAngle(0), focusDistance(10){}

void SetTransform(Position origin = Position(0, 0, 0), Position lookAt = Vector3(0, 0, -1), Vector3 upDirection = Vector3(0, 1, 0));

void SetFocus(double angle = 0, double distance = 10);

void Render(const Hittable& rWorld);

private:

int height;

double aspectRatio, width;

int sampleCount;

int maxBounces;

double verticalFoV;

double defocusAngle, focusDistance;

Position position, target;

Vector3 viewUp;

Vector3 right, up, forward;

Vector3 defocusDiskX, defocusDiskY;

Position center, originPixelLocation;

Vector3 pixelDeltaX, pixelDeltaY;

void Initialize();

Color RayColor(const Ray& rRay, int bouncesLeft, const Hittable& rWorld) const;

Ray GetRay(int x, int y) const;

Vector3 PixelSampleSquared() const;

Position DefocusDiskSample() const;

};

Camera.cpp

void Camera::SetFocus(double angle, double distance)

{

defocusAngle = angle;

focusDistance = distance;

}

Position Camera::DefocusDiskSample() const

{

Position position = RandomInUnitDisk();

return center + (position.x * defocusDiskX) + (position.y * defocusDiskY);

}

Ray Camera::GetRay(int x, int y) const

{

// Get a randomly sampled camera ray for the pixel at location x, y.

Vector3 pixelCenter = originPixelLocation + (x * pixelDeltaX) + (y * pixelDeltaY);

Vector3 pixelSample = pixelCenter + PixelSampleSquared();

Position rayOrigin = defocusAngle <= 0 ? center : DefocusDiskSample();

Vector3 rayDirection = pixelSample - rayOrigin;

return Ray(rayOrigin, rayDirection);

}

and

Camera.cpp

void Camera::Initialize()

{

height = static_cast<int>(width / aspectRatio);

if(height < 1) height = 1;

center = position;

double theta = DegToRad(verticalFoV);

double h = tan(theta / 2);

double viewportHeight = 2 * h * focusDistance;

double viewportWidth = viewportHeight * (static_cast<double>(width)/height);

forward = Unit(position - target);

right = Unit(Cross(viewUp, forward));

up = Cross(forward, right);

Vector3 viewportX = viewportWidth * right;

Vector3 viewportY = viewportHeight * -up; //We invert Y

//Delta vector between pixels

pixelDeltaX = viewportX / width;

pixelDeltaY = viewportY / height;

//Position of the top left pixel

Vector3 viewportOrigin = center - (focusDistance * forward) - viewportX / 2 - viewportY / 2;

originPixelLocation = viewportOrigin + 0.5 * (pixelDeltaX + pixelDeltaY);

//Calculate the camera defocus disk basis vectors

double defocusRadius = focusDistance * tan(DegToRad(defocusAngle / 2));

defocusDiskX = right * defocusRadius;

defocusDiskY = up * defocusRadius;

}
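To get a feeling for the numbers, the defocus radius formula from the end of `Initialize` can be isolated in a small function. This is just a sketch of that one line; `DefocusRadius` is a hypothetical name for this example.

```cpp
#include <cassert>
#include <cmath>

// Radius of the defocus disk for a given defocus angle (in degrees, the
// full angle of the cone) and distance to the focus plane.
inline double DefocusRadius(double defocusAngleDegrees, double focusDistance)
{
    assert(defocusAngleDegrees >= 0.0 && defocusAngleDegrees < 180.0);
    const double kPi = 3.14159265358979323846;
    double halfAngleRadians = (defocusAngleDegrees / 2.0) * kPi / 180.0;
    return focusDistance * std::tan(halfAngleRadians);
}
```

With the values of the test scene, `DefocusRadius(10.0, 3.4)` is about 0.297, while the final render values give `DefocusRadius(0.6, 10.0)`, about 0.052. An angle of 0 gives a radius of 0, which is the case where `GetRay` uses the camera center directly and nothing is blurred.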

Test it out

Raytracing.cpp

int main(int argc, char* argv[])

{

// World

HittableCollection world;

shared_ptr<Material> groundMat = make_shared<LambertianMaterial>(Color(0.8, 0.8, 0.0));

shared_ptr<Material> centerMat = make_shared<LambertianMaterial>(Color(0.1, 0.2, 0.5));

shared_ptr<Material> leftMat = make_shared<DielectricMaterial>(1.5);

shared_ptr<Material> rightMat = make_shared<MetalMaterial>(Color(0.8, 0.6, 0.2), 0.0);

world.Add(make_shared<Sphere>(Position(0,-100.5,-1), 100, groundMat));

world.Add(make_shared<Sphere>(Position(0,0,-1), 0.5, centerMat));

world.Add(make_shared<Sphere>(Position(-1,0,-1), 0.5, leftMat));

world.Add(make_shared<Sphere>(Position(-1,0,-1), -0.4, leftMat));

world.Add(make_shared<Sphere>(Position(1, 0,-1), 0.5, rightMat));

Camera camera(400, 16.0/9.0, 100, 50, 20);

camera.SetTransform(Position(-2, 2, 1), Position(0, 0, -1), Vector3(0, 1, 0));

camera.SetFocus(10.0, 3.4);

camera.Render(world);

return 0;

}

Time for the final render of Book 1

Raytracing.cpp

int main(int argc, char* argv[])

{

// World

HittableCollection world;

shared_ptr<Material> groundMat = make_shared<LambertianMaterial>(Color(0.5, 0.5, 0.5));

world.Add(make_shared<Sphere>(Position(0, -1000, 0), 1000, groundMat));

for(int i = -11; i < 11; i ++)

{

for(int j = -11; j < 11; j++)

{

double materialChoice = RandomDouble();

Position center(i + 0.9 * RandomDouble(), 0.2, j + 0.9 * RandomDouble());

if((center - Position(4, 0.2, 0)).Length() > 0.9)

{

shared_ptr<Material> chosenMat;

if(materialChoice < 0.8)

{

Color albedo = Color::Random() * Color::Random();

chosenMat = make_shared<LambertianMaterial>(albedo);

}

...

else if(materialChoice < 0.95)

{

Color albedo = Color::Random(0.5, 1);

double fuzz = RandomDouble(0, 0.5);

chosenMat = make_shared<MetalMaterial>(albedo, fuzz);

} else

{

chosenMat = make_shared<DielectricMaterial>(1.5);

}

world.Add(make_shared<Sphere>(center, 0.2, chosenMat));

}

}

}

Camera camera(1200, 16.0/9.0, 500, 50, 20);

camera.SetTransform(Position(13, 2, 3), Position(0, 0, 0), Vector3(0, 1, 0));

camera.SetFocus(0.6, 10.0);

camera.Render(world);

return 0;

}
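The material selection in the loop boils down to splitting a random number in [0, 1) into three ranges. Here is a small sketch of just that decision, separated from the scene code. `MaterialKind` and `ChooseMaterialKind` are hypothetical names used for this illustration only.

```cpp
#include <cassert>

// Stand-in for the three material classes used in the scene (assumption).
enum class MaterialKind { Diffuse, Metal, Glass };

// Maps a random value in [0, 1) to a material using the same thresholds
// as the loop above: below 0.8, below 0.95, and the rest.
inline MaterialKind ChooseMaterialKind(double materialChoice)
{
    assert(materialChoice >= 0.0 && materialChoice < 1.0);
    if (materialChoice < 0.8)
        return MaterialKind::Diffuse;
    if (materialChoice < 0.95)
        return MaterialKind::Metal;
    return MaterialKind::Glass;
}
```

With a uniform random input, this gives about 80% diffuse spheres, 15% metal spheres and 5% glass spheres.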

And it should look like this:

(Final render of Book 1)